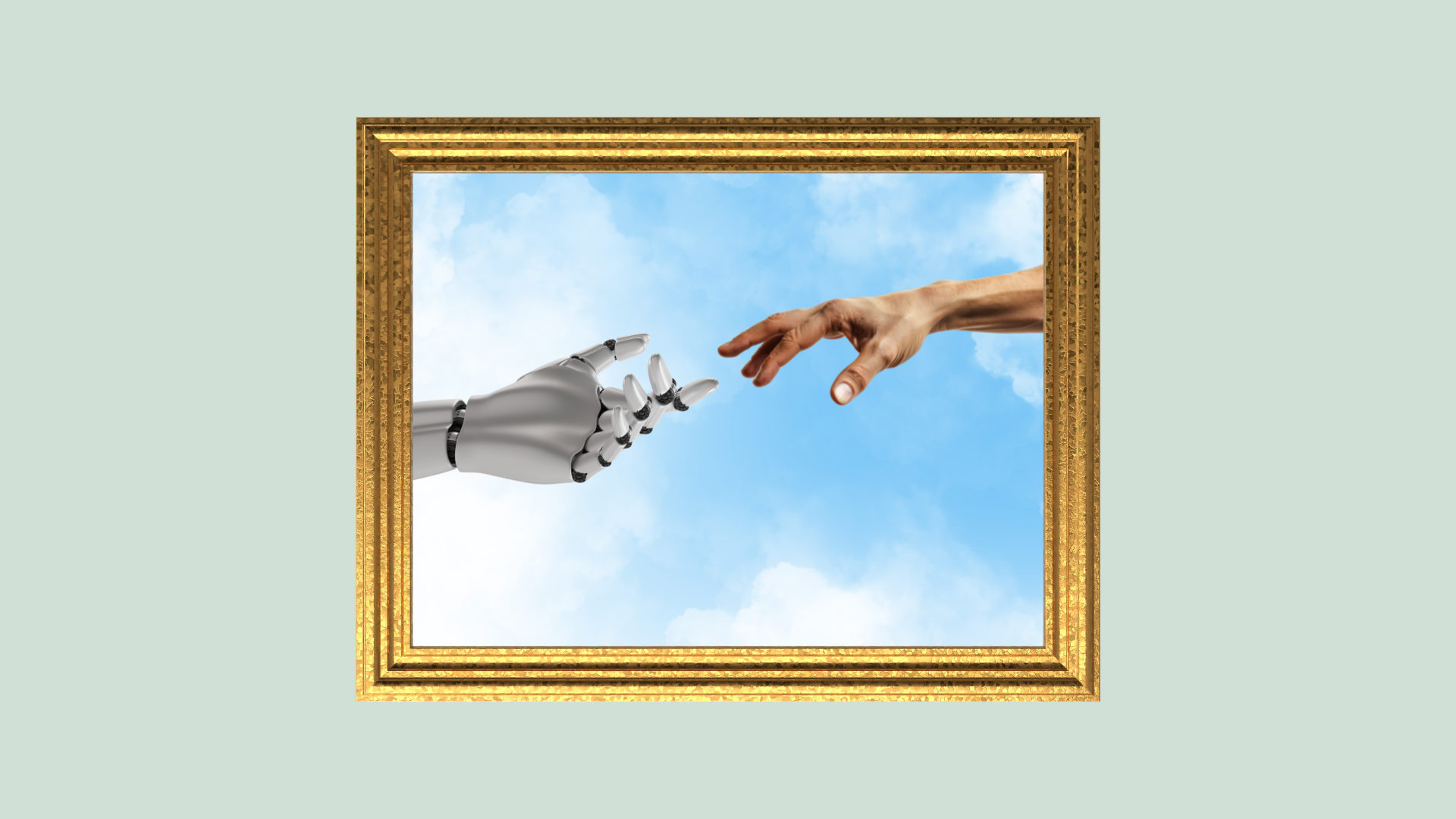

Creation of AI-dam. Graphic by Anna Gritzenbach.

TESSA HAMILTON | OPINION COLUMNIST | tehamilton@butler.edu

The use of artificial intelligence (AI) in academics, art and everyday life is becoming an increasingly prominent issue. Naturally, there is frequent debate over whether AI is ethical and where the line falls between what is morally permissible and what is not.

Honestly, you’ve probably been using AI far longer than you’ve noticed. While generative AI is the major point of contention right now, predictive AI is the more commonly used type. Predictive AI finds recurring patterns in existing data and uses them to predict how future data will pan out. It’s often used to analyze inventory, anticipate supply and demand and predict future sales. It’s also routinely used in the medical industry.

Predictive AI comes with its own problems, however. The most notable is that it can massively magnify biases already present in its data, which raises ethical concerns. For example, the goal of using predictive AI in college application software is to eliminate human bias, such as gender or racial bias, but it often amplifies that bias instead.

Dr. Kelly Van Busum, an assistant professor of computer science and software engineering, researches ways to mitigate bias in predictive AI models. She shared that AI often reflects the data used to train it, and warned against trusting it blindly.

“We know that AI can reflect human biases, but it does them much faster and at a much larger scale,” Van Busum said. “AI can also add additional bias into society, and this is a really hard problem to solve, because AI is, in a lot of ways, a black box. Even the people who program it don’t necessarily know what’s happening inside of that black box, because the math can be so complex. What I try to work on is a way for people and AI to work together so that people can tweak the AI to make sure it’s giving them the results that they consider best.”

Generative AI, on the other hand, is designed to take existing information from the Internet, learn from it and create brand-new content. Obviously, this concept seems exciting when we consider what could be created with it, whether that means making art of whatever we want or asking any question we can possibly conceive, but a few ethical concerns have arisen with its use.

Generative AI uses a great deal of electricity. The data centers that house its servers and equipment demand large amounts of power, and their numbers are rapidly increasing. These data centers alone may use more resources than whole cities, and renewable energy sources cannot meet their demand, pushing them toward environmentally harmful sources such as fossil fuels.

Furthermore, AI art is a subject of growing tension. Many artists claim AI art is taking jobs away from real artists by creating products for free or for far less money. Art used to train AI models can also be taken from artists’ online posts without their consent.

While AI art still lacks the human quality that makes something feel like real art, and frequently messes up details such as hands and words, the possibility that an artist’s own style could be replicated so easily and inexpensively raises moral questions.

Ellie Propsom, a first-year history major and former art major, expressed her belief that generative AI can be “dangerous territory.”

“Personally, as someone that was an art major … I think there’s a lot of legal issues when it comes to generative AI,” Propsom said. “A lot of times companies can start using it in place of actual artists. I think a lot of times real art gets taken into the AI and used without any consent and stuff like that.”

AI brings additional ethical concerns to academic settings. How many times have you been sitting in a workspace or class and heard someone advise, “Just ChatGPT it,” in response to a question on an assignment? Butler Information Technology recently sent out an email updating students with information on generative AI. In addition, class syllabi now have an “Artificial Intelligence” clause when discussing cheating. As AI models are used more widely in academic and professional settings, more policies and student rules must be updated. However, this is proving difficult to manage because AI is evolving rapidly.

Senior computer science major Noah Baker weighed in on AI’s frequent misuse, as well as the unintelligence of artificial intelligence.

“I think that [AI] is a good tool for outlines and things that you would let a fifth grader do,” Baker said. “[It’s destructive by] telling you every single thing about a subject so that you don’t learn it yourself.”

The ethics of AI are questionable on a much more individual scale as well. There are many examples of AI presenting users with concerning information and advice, as seen when an AI chatbot from Google responded to a user by telling them to die, or when Google’s AI Overviews provided shockingly dangerous advice. Users have begun consulting AI and making new models for just about anything you can think of, including an AI Jesus in a Swiss church.

I’m not going to pretend to be morally superior to those who use AI — I can’t even claim that I haven’t used it before. However, it is essential for us to recognize the dangers and ethical concerns that come with it.

Personally, I feel much more comfortable using predictive AI than generative AI, although I try to limit my usage of it overall. I, for one, do not ever want to become fully reliant on digital assistance and intelligence. That said, I want AI to be something that completes the mundane tasks in my life, like doing dishes or making my bed, not something that overtakes my learning ability and passions.

While AI can be a useful tool when used cautiously and ethically, we as a society need to stop trusting it blindly and consider the impacts of our usage of it — especially in the context of generative AI.

And no, just because I use big words doesn’t mean I used ChatGPT — I just know my way around a thesaurus.